If you run your own community edition of a Ghost blog, you might want to make sure your content is safe in case anything ever goes sideways. Read on to learn how to do so with a Node.js service.


TL;DR: If you're after the source code, check out this GitHub repository.

Ghost, like its big PHP-powered brother WordPress, is a content management system. While you can find a use case (and a plugin) for almost anything in WordPress, Ghost's approach is lean. It is powered by Node.js and targets a niche of users who focus on content creation, while offering a great developer experience. I recently started using it for blogging and really like the API it provides. That was my motivation to build the following project.

Note: To follow along, you will need a dedicated instance of Ghost, including a domain and admin access. In case you don't have one but would still like to explore its functionality, there's a demo for the content endpoint. It's read-only, but it'll do. https://demo.ghost.io/ghost/api/v3/content/posts/?key=22444f78447824223cefc48062

Prerequisites

The minimum requirement to follow along is a working installation of Node.js on your machine. I'm using a Raspberry Pi 3+ to run the backups (you'll notice it by the path I save them to), but any other computer will do fine as well. As stated, if you don't have your own Ghost instance, you can still follow along with the demo.

The Ghost API in a nutshell

Ghost can be operated as a headless CMS through its REST admin endpoint. While Ghost also provides a read-only content endpoint, the admin endpoint offers a variety of methods to create, read, update, and delete (CRUD) your content, no matter which client you're using. It does, however, require a few simple steps to establish communication.

Set up a new Ghost integration

If you are following along for the content-endpoint, skip this step.

- In your Ghost admin panel, go to Integrations>Add Custom Integration

- Give that integration a name and create it.

- When that's done, two API keys will be created. Make sure to never share either of these keys with anybody as they provide access to your Ghost platform without user credentials.

- If you're using the content endpoint, you can use the content key right away as a URL query parameter, as shown in the note above, to make GET requests like this:

```bash
# Make a curl GET request to the Ghost demo endpoint in your terminal
# (the quotes stop shells like zsh from interpreting the "?")
$ curl "https://demo.ghost.io/ghost/api/v3/content/posts/?key=22444f78447824223cefc48062"
```

- In our case, we'll need to note down the admin key, as we'll use it later on for JSON Web Token (JWT) authentication.

- Having done that, let us now move over to our IDE and start writing the logic.
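If you're following along with the demo, every Content API request needs the content key as a key query parameter. A minimal sketch of building such a URL with Node's built-in URL class; the helper name buildContentApiUrl is hypothetical and not part of Ghost:

```javascript
// Hypothetical helper: builds a Ghost Content API URL with the content key
// appended as the "key" query parameter, e.g. for the read-only demo instance
function buildContentApiUrl(apiRoot, resource, contentKey) {
  // Make sure the API root ends with exactly one slash before resolving the resource
  const base = apiRoot.endsWith('/') ? apiRoot : `${apiRoot}/`;
  const url = new URL(`${resource}/`, base);
  url.searchParams.set('key', contentKey);
  return url.toString();
}

const demoUrl = buildContentApiUrl(
  'https://demo.ghost.io/ghost/api/v3/content',
  'posts',
  '22444f78447824223cefc48062'
);
console.log(demoUrl);
// https://demo.ghost.io/ghost/api/v3/content/posts/?key=22444f78447824223cefc48062
```

Passing the resulting URL to a fetch implementation returns the same JSON as the curl call above.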

Get started

Prepare the project structure

Change into a directory of your choice and initialize a new npm project:

```bash
$ cd /path/to/project
$ npm init -y
```

Next, let's set up a basic structure for our app:

- Create two new directories: config and services.

- In the config directory, create two files: config.js and util.js.

- In the services directory, create one file called backupContent.js.

- In the root directory, create one file called index.js.
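If you'd rather script these steps, here is a small sketch that creates the same folders and empty files using only the built-in fs and path modules. The scaffold function is a made-up name for this example:

```javascript
const fs = require('fs');
const path = require('path');

// Hypothetical helper: creates the project layout described above under `root`
function scaffold(root) {
  const files = ['config/config.js', 'config/util.js', 'services/backupContent.js', 'index.js'];

  for (const file of files) {
    const target = path.join(root, file);
    // Create the parent directory (config/ or services/) if it doesn't exist yet
    fs.mkdirSync(path.dirname(target), { recursive: true });
    // Create an empty file, but never overwrite a file that already exists
    if (!fs.existsSync(target)) fs.writeFileSync(target, '');
  }

  return files.map(file => path.join(root, file));
}
```

Calling scaffold(process.cwd()) from the project root creates the four files; running it again leaves existing files untouched.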

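After doing so, install the following npm packages:

- chalk for colorized console output (version 4, because chalk 5 and later are ESM-only and can't be loaded with require)

- isomorphic-fetch for HTTP requests (alternatives: node-fetch, axios)

- jsonwebtoken for authentication against the admin endpoint

- rimraf to safely delete old backups (version 3, which provides the rimraf.sync function used below)

```bash
$ npm i chalk@4 isomorphic-fetch jsonwebtoken rimraf@3
```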

Finally, let's add an npm script to our package.json file to run the code:

```json
{
  [...],
  "scripts": {
    "start": "node index"
  },
  [...]
}
```

If everything went well, your project now has the following structure and all relevant node modules installed. We're ready to move ahead and bring it to life.

```
/
| - config
| | - config.js
| | - util.js
| - node_modules
| - services
| | - backupContent.js
| - index.js
```

Set up the configuration

Add the code below to your config.js file. The variables defined here hold the API and backup configuration and serve the following purposes:

| Variable name | Purpose |
|---|---|
| ghostApiUrl | The root path of your Ghost admin endpoint |
| ghostApiPaths | The endpoint paths from which to fetch backup data |
| ghostAdminKey | The integration admin key you noted down earlier |
| backupInterval | The time between two backups, in milliseconds |
| backupDirPath | The path on the backup machine where backups are stored |
| backupLifetime | How many months to keep old backups |
| genBackupDirPath | A function that generates a unique, timestamped path for each backup directory |

```javascript
const { join } = require('path');

// Configure the relevant API params
const ghostApiUrl = 'https://<your-ghost-domain>/ghost/api/v3/admin';
// If you are following along with the demo, use the following line instead of the one above:
// const ghostApiUrl = 'https://demo.ghost.io/ghost/api/v3/content';
// Also, make sure to append the ?key=... query in the service that uses this parameter
const ghostApiPaths = ['posts', 'pages', 'site', 'users'];
const ghostAdminKey = '<your-admin-key>';

// Configure the backup settings
const backupInterval = 1000 /* Milliseconds */ * 60 /* Seconds */ * 60 /* Minutes */ * 24 /* Hours */ * 1; /* Days */
const backupDirPath = '/home/pi/Backups';
const backupLifetime = 4; /* Months */

// Generate a unique backup directory path, e.g. /home/pi/Backups/2021_01_02-13_55
const genBackupDirPath = () => {
  const date = new Date();
  const year = date.getFullYear();
  const month = (date.getMonth() + 1).toString().padStart(2, '0');
  const day = date.getDate().toString().padStart(2, '0');
  const hour = date.getHours().toString().padStart(2, '0');
  const min = date.getMinutes().toString().padStart(2, '0');
  const now = `${year}_${month}_${day}-${hour}_${min}`;
  return join(backupDirPath, now);
};

module.exports = {
  ghostApiUrl,
  ghostApiPaths,
  ghostAdminKey,
  backupInterval,
  backupLifetime,
  backupDirPath,
  genBackupDirPath,
};
```
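Since genBackupDirPath reads the current time, its output is hard to check by hand. Here is the same YYYY_MM_DD-HH_mm formatting as a pure function that takes the date as an argument, together with the arithmetic behind the default backupInterval. The name formatBackupName is hypothetical:

```javascript
// Same YYYY_MM_DD-HH_mm format as genBackupDirPath, but with the date passed in
function formatBackupName(date) {
  const pad = n => n.toString().padStart(2, '0');
  const year = date.getFullYear();
  const month = pad(date.getMonth() + 1); // getMonth() is zero-based
  const day = pad(date.getDate());
  const hour = pad(date.getHours());
  const min = pad(date.getMinutes());
  return `${year}_${month}_${day}-${hour}_${min}`;
}

// new Date(year, monthIndex, day, hours, minutes) is interpreted in local time,
// like the getters above, so the result doesn't depend on the time zone
console.log(formatBackupName(new Date(2021, 0, 2, 13, 55))); // 2021_01_02-13_55

// The default backupInterval: 1000 ms * 60 s * 60 min * 24 h = one day
const backupInterval = 1000 * 60 * 60 * 24 * 1;
console.log(backupInterval); // 86400000
```

Because every field is zero-padded and ordered from year down to minute, sorting the backup directory names alphabetically also sorts them chronologically.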

Prepare the utility functions

There are two utilities we will need in our backup service:

- One to handle authentication. The Ghost admin endpoint uses JSON Web Token (JWT) authentication, and the admin key you received from your integration contains both the key ID and the secret used to sign the token.

- Another one to handle the deletion of older backups. It will be called once per backup cycle.
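To make the authentication step less of a black box: the admin key has the form id:secret, where the secret is hex-encoded, and the token is a standard HS256 JSON Web Token whose header carries the key ID as kid and whose payload carries iat, exp, and aud. The sketch below builds a token with the same claims using Node's built-in crypto module instead of jsonwebtoken, purely for illustration; it is not guaranteed to be byte-identical to the jsonwebtoken output (property order may differ). The function name signGhostAdminToken and the sample key are made up:

```javascript
const crypto = require('crypto');

// Base64url-encode a string or Buffer: standard base64 with URL-safe
// characters and without "=" padding, as required by the JWT format
const base64url = input =>
  Buffer.from(input)
    .toString('base64')
    .replace(/=+$/, '')
    .replace(/\+/g, '-')
    .replace(/\//g, '_');

// Hypothetical helper producing the same claims as the jsonwebtoken sign() call
function signGhostAdminToken(adminKey, audience, nowSeconds = Math.floor(Date.now() / 1000)) {
  const [id, secret] = adminKey.split(':');

  // The key ID travels in the token header as "kid"
  const header = { alg: 'HS256', typ: 'JWT', kid: id };
  // Valid for five minutes, like expiresIn: '5m'
  const payload = { iat: nowSeconds, exp: nowSeconds + 5 * 60, aud: audience };

  const signingInput = `${base64url(JSON.stringify(header))}.${base64url(JSON.stringify(payload))}`;

  // The hex-encoded secret is decoded to raw bytes and used as the HMAC-SHA256 key
  const signature = crypto
    .createHmac('sha256', Buffer.from(secret, 'hex'))
    .update(signingInput)
    .digest();

  return `${signingInput}.${base64url(signature)}`;
}

// Made-up key in the id:secret format, with a fixed timestamp for reproducibility
const token = signGhostAdminToken('1234abcd:00112233445566778899aabbccddeeff', '/v3/admin', 1600000000);
const [headerPart, payloadPart] = token.split('.');
console.log(JSON.parse(Buffer.from(headerPart, 'base64').toString()));
// { alg: 'HS256', typ: 'JWT', kid: '1234abcd' }
console.log(JSON.parse(Buffer.from(payloadPart, 'base64').toString()));
// { iat: 1600000000, exp: 1600000300, aud: '/v3/admin' }
```

The resulting string is what goes after "Ghost " in the Authorization header.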

Add the following to your util.js file:

```javascript
// Import the necessary functions
const { promises } = require('fs');
const { join } = require('path');
const { sign } = require('jsonwebtoken');
const rimraf = require('rimraf');

/**
 * @desc Create the authorization header to authenticate against the
 * Ghost admin-endpoint
 *
 * @param {String} ghostAdminKey The admin key in the format <id>:<secret>
 *
 * @returns {Object} The headers to be appended to the HTTP request
 */
function genAdminHeaders(ghostAdminKey) {
  // Split the admin key into its ID and its hex-encoded secret
  const [id, secret] = ghostAdminKey.split(':');

  // Create a token with an empty payload and sign it with the decoded secret
  const token = sign({}, Buffer.from(secret, 'hex'), {
    keyid: id,
    algorithm: 'HS256',
    expiresIn: '5m',
    audience: '/v3/admin',
  });

  // Create the headers object that's added to the request
  return { Authorization: `Ghost ${token}` };
}

/**
 * @desc Delete backup directories that are older than the backupLifetime.
 *
 * @param {String} dirPath The path to where backups are being stored
 * @param {Number} backupLifetime The number of months a backup is to be stored
 *
 * @returns {Promise<Array>} The names of the backup directories that have been deleted
 */
async function deleteOldDirectories(dirPath, backupLifetime) {
  const dir = await promises.opendir(dirPath);
  const deletedBackups = [];

  const now = new Date();
  const year = now.getFullYear();
  const month = now.getMonth() + 1;

  for await (const dirent of dir) {
    // Backup directories are named YYYY_MM_DD-HH_mm, so the first two
    // underscore-separated parts hold the year and month of creation
    const [createdYear, createdMonth] = dirent.name.split('_').map(Number);

    // Skip anything that doesn't follow this naming scheme
    if (!dirent.isDirectory() || !Number.isInteger(createdYear) || !Number.isInteger(createdMonth)) {
      continue;
    }

    // Count the months that have passed since the backup was made,
    // including across year boundaries
    const ageInMonths = (year - createdYear) * 12 + (month - createdMonth);

    if (ageInMonths >= backupLifetime) {
      deletedBackups.push(dirent.name);
      rimraf.sync(join(dirPath, dirent.name));
    }
  }

  return deletedBackups;
}

module.exports = { genAdminHeaders, deleteOldDirectories };
```
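The retention rule boils down to counting calendar months between a backup's year and month and today's, across year boundaries. Pulled out into a pure function (the name isBackupExpired is hypothetical), the rule is easy to check by hand:

```javascript
// Hypothetical helper: decides whether a backup directory named YYYY_MM_DD-HH_mm
// is at least `lifetimeMonths` calendar months old, relative to `now`
function isBackupExpired(dirName, lifetimeMonths, now = new Date()) {
  const [createdYear, createdMonth] = dirName.split('_').map(Number);

  // Ignore entries that don't follow the backup naming scheme
  if (!Number.isInteger(createdYear) || !Number.isInteger(createdMonth)) return false;

  // Months elapsed, counting across year boundaries (the day of the month is ignored)
  const ageInMonths =
    (now.getFullYear() - createdYear) * 12 + (now.getMonth() + 1 - createdMonth);
  return ageInMonths >= lifetimeMonths;
}

const today = new Date(2021, 1, 15); // 15 February 2021, local time
console.log(isBackupExpired('2021_01_02-13_55', 4, today)); // false (1 month old)
console.log(isBackupExpired('2020_10_30-08_00', 4, today)); // true (4 months old)
console.log(isBackupExpired('2020_11_01-08_00', 4, today)); // false (3 months old)
console.log(isBackupExpired('notes.txt', 4, today)); // false (not a backup)
```

Because only the month counts, all backups made in October 2020 expire together on 1 February 2021 with a four-month lifetime.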

Write the service

Add the following to your backupContent.js file. Read the comments inside the code if you're curious about what's going on:

```javascript
// Load relevant core package functions
const { join } = require('path');
const { mkdirSync, writeFileSync } = require('fs');
const { log } = require('console');

// Load npm modules
const fetch = require('isomorphic-fetch');
const { red, yellow, green } = require('chalk');

// Load config and utility functions
const { ghostApiUrl, ghostApiPaths, ghostAdminKey, backupDirPath, genBackupDirPath, backupLifetime } = require('../config/config');
const { genAdminHeaders, deleteOldDirectories } = require('../config/util');

// Main function for the backup service
async function runBackupContent() {
  log(green(`⏳ Time to backup your content. Timestamp: ${new Date()}`));

  try {
    // Generate the backup directory and the authorization headers
    // e.g. /home/pi/Backups/2021_01_02-13_55
    const dir = genBackupDirPath();
    const headers = genAdminHeaders(ghostAdminKey);

    // Make sure the backup root exists, then create this run's directory.
    // The second call throws EEXIST if a backup for this minute already exists.
    mkdirSync(backupDirPath, { recursive: true });
    mkdirSync(dir);

    // Check for old backups and clean them up (deleteOldDirectories is async)
    const deleted = await deleteOldDirectories(backupDirPath, backupLifetime);
    if (deleted.length > 0) {
      deleted.forEach(deletedBackup => log(yellow(`☝ Deleted backup from ${deletedBackup}`)));
    } else {
      log(green('✅ No old backups to be deleted'));
    }

    // Make a backup for all the content specified in the API paths config.
    // Awaiting each request in a for...of loop (rather than forEach with an
    // async callback) lets failed requests reach the catch block below.
    for (const path of ghostApiPaths) {
      // The endpoint from where to receive backup data
      // e.g. https://<your-ghost-domain>/ghost/api/v3/admin/posts
      const url = `${ghostApiUrl}/${path}`;

      // options.headers holds the JSON Web Token
      // e.g. 'Authorization': 'Ghost eybf12bf.8712dh.128d7g12'
      const options = { method: 'GET', timeout: 1500, headers: { ...headers } };

      // The name of the corresponding backup file, e.g. posts.json
      const filePath = join(dir, `${path}.json`);

      const response = await fetch(url, options);

      if (response.status !== 200) {
        // If the HTTP status is unexpected, write the response body to a separate file
        const errPath = join(dir, `${path}-error.json`);
        writeFileSync(errPath, await response.text());
        log(red(`❌ ${new Date()}: Something went wrong while trying to backup ${path}`));
      } else {
        // If the HTTP status is as expected, write the result to a file
        const data = await response.json();
        writeFileSync(filePath, JSON.stringify(data));
        log(green(`✅ Wrote backup for '${path}' - endpoint into ${filePath}`));
      }
    }
  } catch (e) {
    // Do appropriate error handling based on the error code
    switch (e.code) {
      // If, for some reason, two backups are made at the same time
      case 'EEXIST':
        log(red('❌ Tried to execute a backup for the same time period twice. Cancelling ... '));
        break;
      default:
        log(red(e.message));
        break;
    }
  }
}

module.exports = runBackupContent;
```
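If you'd like all paths to be requested in parallel while still getting a result for each one, Promise.allSettled is one way to do it: it waits for every promise and never short-circuits on a rejection. In the sketch below, backupAllPaths and the injected fetchPath function are hypothetical names; in the service, fetchPath would wrap the fetch call and the file write for a single path:

```javascript
// Turn a rejection reason into a readable message
const errorMessage = reason => (reason instanceof Error ? reason.message : String(reason));

// Hypothetical helper: runs one backup task per API path in parallel and
// reports per path whether it succeeded, without one failure hiding the others
async function backupAllPaths(paths, fetchPath) {
  const results = await Promise.allSettled(paths.map(path => fetchPath(path)));

  return results.map((result, i) => ({
    path: paths[i],
    ok: result.status === 'fulfilled',
    // The resolved value, or the message of the rejection
    detail: result.status === 'fulfilled' ? result.value : errorMessage(result.reason),
  }));
}

// A fake fetcher, so the example runs without a Ghost instance:
// it pretends the 'users' request fails with an HTTP 401
const fakeFetchPath = async path => {
  if (path === 'users') throw new Error('HTTP 401');
  return `${path}.json`;
};

backupAllPaths(['posts', 'pages', 'site', 'users'], fakeFetchPath).then(summary => {
  summary.forEach(({ path, ok, detail }) => console.log(`${ok ? 'OK  ' : 'FAIL'} ${path}: ${detail}`));
});
```

Injecting fetchPath keeps the helper independent of isomorphic-fetch and the file system, which also makes it easy to try out with a fake like the one shown.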

Wrap up and run the service

Let's briefly recap what we've achieved:

- We created a service that makes backups of our Ghost blog on a regular basis.

- To do so, it uses Ghost's REST admin endpoint and JSON Web Token authentication.

- The backups are saved in a dedicated folder, and backups older than 4 months are automatically deleted.

Let's now import the service into our index.js file and run it:

```javascript
// Load node modules
const { log } = require('console');
const { green } = require('chalk');

// Load the backup interval from the configuration
const { backupInterval } = require('./config/config');

// Load the service
const runBackupContent = require('./services/backupContent');

// Start the service: run one backup right away, then repeat it with the given interval
log(green(`⏳ Scheduled backup job with a ${backupInterval / (1000 * 60 * 60)} - hour interval`));
runBackupContent();
setInterval(() => runBackupContent(), backupInterval);
```

Then, in your console, run the start script:

```bash
$ npm run start

# Or run index directly
$ node index
```

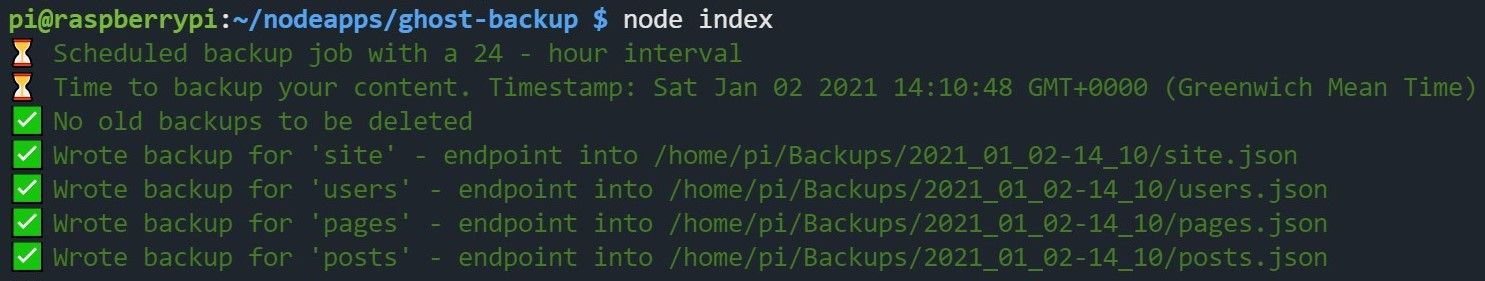

Assuming everything else went well, you will now see the following console output:

And there you have it: a basic but working backup service for your blog posts.

Next steps and more features

I could imagine adding the following features:

- A service that automatically reads the latest backup and sends several POST requests to the admin endpoint (one per path) to restore lost pages and posts.

- Use the pm2 process manager to run and monitor this service.

- Put the configuration in a database or a .env file and build a neat monitoring API.

- Use a Telegram bot to send messages to your phone when a backup succeeds or fails.
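For the .env idea, one approach is to read the settings from environment variables (a package such as dotenv can load a .env file into process.env). A minimal sketch, where loadConfigFromEnv and all variable names are hypothetical and the defaults mirror config.js:

```javascript
// Hypothetical helper: builds the backup settings from environment variables,
// falling back to the defaults used in config.js. The variable names are made up.
function loadConfigFromEnv(env = process.env) {
  const required = name => {
    const value = env[name];
    if (!value) throw new Error(`Missing required environment variable ${name}`);
    return value;
  };

  return {
    ghostApiUrl: required('GHOST_API_URL'),
    ghostAdminKey: required('GHOST_ADMIN_KEY'),
    backupDirPath: env.BACKUP_DIR_PATH || '/home/pi/Backups',
    // Environment variables are strings, so convert numeric settings explicitly
    backupLifetime: Number(env.BACKUP_LIFETIME_MONTHS || 4),
    backupInterval: Number(env.BACKUP_INTERVAL_MS || 1000 * 60 * 60 * 24),
  };
}

const config = loadConfigFromEnv({
  GHOST_API_URL: 'https://<your-ghost-domain>/ghost/api/v3/admin',
  GHOST_ADMIN_KEY: '<your-admin-key>',
  BACKUP_LIFETIME_MONTHS: '6',
});
console.log(config.backupLifetime, config.backupInterval); // 6 86400000
```

Failing fast on a missing admin key surfaces a misconfiguration at startup instead of at the first scheduled backup.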